When it comes to security cameras, the end user always wants more: more resolution, more artificial intelligence (AI), and more sensors. However, the cameras themselves do not change much from generation to generation; they have the same power budgets, form factors, and prices. To deliver “more,” the systems-on-chip (SoCs) inside video cameras must pack in more features and integrate functions that once required separate components.

For an update on the latest capabilities of SoCs inside video cameras, we turned to Jérôme Gigot, Senior Director of Marketing for AIoT at Ambarella, a manufacturer of SoCs. AIoT, short for artificial intelligence of things, refers to the combination of AI and the Internet of Things (IoT).


“The AI performance on today’s cameras matches what was typically done on a server just a generation ago,” says Gigot. “And, doing AI on-camera provides the threefold benefits of being able to run algorithms on a higher-resolution input before the video is encoded and transferred to a server, with a faster response time, and with complete privacy.”

Added features of the new SoC


Ambarella’s latest SoC is the CV72S, which provides 6× the AI performance of the previous generation and supports the newer transformer neural networks. Despite its extra features, the CV72S stays within the same power envelope as the previous-generation SoCs. The CV72S is now available and sampling to camera manufacturers, and Ambarella expects the first cameras with the SoC to reach the market in early 2024.

Examples of features integrated into the new SoC include image processing, video encoders, AI engines, de-warpers for fisheye lenses, and general-purpose compute cores, along with functions such as processing multiple imagers on a single SoC and fusing data from different sensor types. This article summarizes the new AI capabilities, based on information provided by Ambarella.

AI inside the cameras

Gigot says AI is by far the most in-demand feature of new security camera SoCs. Customers want to run the latest neural network architectures, run more of them in parallel to achieve more functions (e.g., identifying pedestrians while simultaneously flagging suspicious behavior), and run them at higher resolutions to pick out objects farther from the camera. And they want to do it all faster.

Most AI tasks can be divided into object detection, object recognition, segmentation, and higher-level “scene understanding” functions, he says. The latest AI engines support transformer network architectures, an alternative to the convolutional neural networks (CNNs) in wide use today. With enough AI horsepower, every object in a scene can be uniquely identified, classified with a set of attributes, tracked across time and space, and fed into higher-level AI algorithms that detect and flag anomalies.

However, everything depends on which scene is within the camera’s field of view. “It might be an easy task for a camera in an office corridor to track a person passing by every couple of minutes, while a ceiling camera in an airport might be looking at thousands of people, all constantly moving in different directions and carrying a wide variety of bags,” Gigot says.

Changing the configuration of video systems


Even with more computing capability inside the camera, central video servers still have their place in the overall AI deployment, because they can more easily aggregate and interpret information across multiple cameras. Low-level AI number crunching, by contrast, would typically be done on the camera, at the source of the data.

However, the increasing performance of transformer neural networks inside the camera will reduce the need for a central video server over time. Even so, a server could still make higher-level decisions, maintain a representation of the world, and provide a user interface that helps people make sense of all the data.

Overall, AI-enabled security cameras with transformer-based functionality will greatly reduce the use of central servers in security systems. This trend will help reduce the greenhouse gas emissions associated with data centers. These server farms consume a lot of energy to power their GPU and CPU chips, and still more to run the air conditioning that keeps those processors cool.

New capabilities of transformer neural networks

New kinds of AI architectures are being deployed inside cameras. Newer SoCs can accommodate the latest transformer neural networks (NNs), which now outperform today’s widely used convolutional NNs on many vision tasks, although transformers generally require more AI processing power than most convolutional NNs. Transformers first proved themselves in natural language processing (NLP), because their attention mechanism lets them “make sense” of a seemingly jumbled arrangement of words by weighing how each word relates to every other. Those same properties, applied to video, make transformers well suited to understanding the world in 3D.


For example, imagine a multi-imager camera where an object must be tracked as it moves from one imager’s field of view to the next; a transformer can relate what each imager sees to keep following the same object. Transformer networks are also good at focusing their attention on specific parts of the scene: just as some words in a sentence matter more than others, some parts of a scene may be more significant from a security perspective.
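To make the idea of “attention” concrete, here is a minimal sketch of scaled dot-product attention, the core operation in transformer networks, in plain Python. The three “patch” vectors are made-up toy values standing in for features extracted from regions of a frame; real camera networks use learned projections, many attention heads, and dedicated AI hardware, none of which is shown here.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Each key/value pair describes one region (patch) of the scene. The output
    is a weighted average of the values; the weights show how strongly the
    query "attends" to each region.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * v[i] for w, v in zip(weights, values))
              for i in range(len(values[0]))]
    return weights, output

# Hypothetical 2-D feature vectors for three scene patches.
keys = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
values = [[10.0, 0.0], [0.0, 10.0], [8.0, 2.0]]
query = [1.0, 0.0]  # "looking for" features that resemble patch 0

weights, output = attention(query, keys, values)
print([round(w, 3) for w in weights])
```

Patches 0 and 2, whose features resemble the query, receive most of the weight, while patch 1 is largely ignored; this is the sense in which a transformer can concentrate on the parts of a scene that matter.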

“I believe that we are currently just scratching the surface of what can be done with transformer networks in video security applications,” says Gigot. The first use cases are mainly object detection and recognition, but neural network research is increasingly focused on these new transformer architectures and their applications.

Expanded use cases for multi-imager and fisheye cameras

For multi-imager cameras, the strategy is “less is more.” For example, building a multi-imager camera with four 4K sensors essentially means putting four cameras in one: four imaging pipelines, four encoders, four AI engines, and four sets of CPUs to run the higher-level software and streaming. For cost, size, and power reasons, however, using four separate SoCs for all this processing would be extremely inefficient.

Therefore, the latest security SoCs need roughly four times the performance of the previous generation’s single-imager 4K camera SoCs, so that one chip can process all four sensors along with the associated AI algorithms. And they must do so within a reasonable size and power budget.
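A quick back-of-the-envelope calculation shows what “four times the performance” means in raw pixels. This sketch assumes 4K means 3840 × 2160 pixels and 30 frames per second; the article does not specify frame rates, so treat the numbers as illustrative.

```python
def pixel_rate(width, height, fps, sensors=1):
    """Pixels per second that every processing stage must sustain."""
    return width * height * fps * sensors

single = pixel_rate(3840, 2160, 30)           # one 4K sensor (assumed 30 fps)
quad = pixel_rate(3840, 2160, 30, sensors=4)  # four 4K sensors on one SoC

print(f"{single / 1e6:.1f} Mpixel/s for one 4K sensor")    # 248.8 Mpixel/s
print(f"{quad / 1e6:.1f} Mpixel/s for four 4K sensors")    # 995.3 Mpixel/s
```

Under these assumptions, the image pipeline, encoders, and AI engines of a four-imager SoC each have to keep up with roughly a billion pixels per second.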

The challenge is very similar for fisheye cameras, where the SoC must accept very high-resolution sensors (e.g., 12MP, 16MP, and higher) so that the image retains high resolution after de-warping. That same SoC must also create all the virtual views needed to make one fisheye camera look like multiple physical cameras, and it must do all this while running the AI algorithms on every one of those virtual streams at high resolution.
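Conceptually, a de-warper builds each virtual view by asking, for every output pixel of a virtual “pinhole” camera, which pixel of the fisheye image it should sample. The sketch below assumes an ideal equidistant fisheye model (radius = f·θ) with the optical axis through the image center; the function name and parameters are hypothetical, real lenses need calibrated distortion models, and SoCs perform this mapping in dedicated hardware.

```python
import math

def virtual_to_fisheye(u, v, out_w, out_h, hfov_deg, pan_deg, tilt_deg,
                       fish_cx, fish_cy, fish_f):
    """Map pixel (u, v) of a virtual perspective view to fisheye coordinates.

    Assumes an equidistant fisheye (radius = fish_f * theta) looking along +z.
    tilt tips the virtual view away from the fisheye's optical axis;
    pan rotates it around that axis.
    """
    # Focal length of the virtual pinhole camera from its horizontal FOV.
    f_virt = (out_w / 2) / math.tan(math.radians(hfov_deg) / 2)
    # Ray through the output pixel in the virtual camera's frame (z forward).
    x, y, z = u - out_w / 2, v - out_h / 2, f_virt
    # Tilt: rotate the ray about the x axis.
    t = math.radians(tilt_deg)
    y, z = y * math.cos(t) - z * math.sin(t), y * math.sin(t) + z * math.cos(t)
    # Pan: rotate the ray about the z axis (the fisheye's optical axis).
    p = math.radians(pan_deg)
    x, y = x * math.cos(p) - y * math.sin(p), x * math.sin(p) + y * math.cos(p)
    # Angle from the optical axis (theta) and azimuth around it (phi).
    theta = math.atan2(math.hypot(x, y), z)
    phi = math.atan2(y, x)
    r = fish_f * theta  # equidistant projection
    return fish_cx + r * math.cos(phi), fish_cy + r * math.sin(phi)

# Hypothetical 4000x4000 (16MP) fisheye with a 180-degree field of view,
# so theta = 90 degrees lands on the edge of the image circle (radius 2000 px).
FISH_F = 2000 / (math.pi / 2)
# Center pixel of a 640x360 virtual view tilted 45 degrees off-axis.
cx_src, cy_src = virtual_to_fisheye(320, 180, 640, 360, 90, 0, 45, 2000, 2000, FISH_F)
print(round(cx_src, 3), round(cy_src, 3))
```

Repeating this lookup for every pixel of every virtual view, and then sampling (typically with interpolation), is what lets one physical fisheye sensor act like several conventional cameras; it is also why a high-resolution sensor is needed, since each virtual view uses only part of the image circle.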

The power of ‘sensor fusion’


Sensor fusion is the ability to process multiple sensor types at the same time (e.g., visual, radar, thermal, and time of flight) and correlate all that information. Performing sensor fusion provides an understanding of the world that is greater than the information that could be obtained from any one sensor type in isolation. In terms of chip design, this means that SoCs must be able to interface with, and natively process, inputs from multiple sensor types.

Additionally, the SoCs must have the AI and CPU performance to perform either object-level fusion (i.e., matching the objects identified by each of the different sensors) or deep-level fusion. Deep fusion takes the raw data from each sensor and runs AI on that unprocessed data. The result is machine-level insights that are richer than those from systems that must first pass through an intermediate object representation.

In other words, deep fusion eliminates the information loss that comes from preprocessing each individual sensor’s data before fusing it with the data from other sensors, which is what happens in object-level fusion.
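Object-level fusion can be sketched as a matching problem: each sensor produces its own list of detected objects, and the fusion step pairs detections that appear to be the same physical object. The sketch below greedily pairs camera and radar detections whose estimated ground positions fall within a distance gate. The `Detection` format, the positions, and the 1.5 m gate are hypothetical; production systems typically use calibrated coordinate transforms, optimal assignment (e.g., the Hungarian algorithm), and tracking filters.

```python
import math
from dataclasses import dataclass

@dataclass
class Detection:
    sensor: str
    label: str   # class reported by the sensor ("person", "vehicle", "unknown")
    x: float     # ground-plane position in meters (assumed shared frame)
    y: float

def fuse_objects(camera_dets, radar_dets, gate_m=1.5):
    """Greedy nearest-neighbor matching of camera and radar detections."""
    candidates = []
    for i, c in enumerate(camera_dets):
        for j, r in enumerate(radar_dets):
            d = math.hypot(c.x - r.x, c.y - r.y)
            if d <= gate_m:
                candidates.append((d, i, j))
    candidates.sort()  # closest pairs first
    used_cam, used_radar, fused = set(), set(), []
    for d, i, j in candidates:
        if i in used_cam or j in used_radar:
            continue  # each detection may be matched at most once
        used_cam.add(i)
        used_radar.add(j)
        c, r = camera_dets[i], radar_dets[j]
        # Camera supplies the class label; position is averaged from both sensors.
        fused.append((c.label, (c.x + r.x) / 2, (c.y + r.y) / 2))
    return fused

camera = [Detection("camera", "person", 10.0, 2.0),
          Detection("camera", "vehicle", 25.0, -3.0)]
radar = [Detection("radar", "unknown", 10.4, 2.3),
         Detection("radar", "unknown", 40.0, 0.0)]
result = fuse_objects(camera, radar)
print(result)
```

Only the person is confirmed by both sensors here. Note that each sensor’s output is first reduced to a label and a position before matching; that intermediate object representation is exactly the information loss that deep fusion avoids.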

Better image quality

AI can be trained to dramatically improve the quality of images captured by camera sensors in low-light conditions, as well as in high dynamic range (HDR) scenes with widely contrasting dark and light areas. Typical image sensors are very noisy at night, and AI algorithms can be trained to remove this noise effectively, producing a clear color picture even at 0.1 lux or below. This approach is called neural network-based image signal processing, or AISP for short.


Achieving high image quality under difficult lighting conditions is always a balance among removing noise, avoiding excessive motion blur, and recovering colors. AI can be trained to perform all these functions with much better results than traditional video processing methods. A key point for video security is that these AI algorithms do not “create” data; they only remove noise and clean up the signal. The result is better footage for the humans monitoring video security systems, as well as better input for the AI algorithms analyzing that footage, particularly at night and under high dynamic range conditions.

A typical example is a camera that must switch to night mode (black and white) when the ambient light falls below a certain lux level. With these specially trained AI algorithms, the same camera can stay in color mode and at full frame rate, even at night. This has several advantages, including the ability to see much farther than a typical external illuminator would allow, and lower power consumption.
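In a conventional camera, the switch to night mode is essentially a threshold on the measured light level, commonly with hysteresis so the camera does not flicker between modes at dusk. The sketch below models that logic alongside an AISP-style option that keeps color mode at any light level. The class, the lux thresholds, and the `aisp_enabled` flag are hypothetical illustrations, not Ambarella parameters.

```python
from dataclasses import dataclass

@dataclass
class DayNightController:
    to_night_lux: float = 1.0    # hypothetical: switch to B&W below this level
    to_day_lux: float = 3.0      # hypothetical: switch back to color above this level
    aisp_enabled: bool = False   # hypothetical: AI denoising keeps color at night
    mode: str = "color"

    def update(self, lux: float) -> str:
        if self.aisp_enabled:
            # AI-based ISP cleans up the noisy low-light signal, so stay in color.
            self.mode = "color"
        elif self.mode == "color" and lux < self.to_night_lux:
            # Conventional camera: drop to B&W (and rely on an external illuminator).
            self.mode = "night_bw"
        elif self.mode == "night_bw" and lux > self.to_day_lux:
            self.mode = "color"
        # Between the two thresholds the current mode is kept (hysteresis).
        return self.mode

readings = [50, 5, 0.8, 2.0, 0.5, 4.0]  # lux samples around dusk
legacy = DayNightController()
ai_cam = DayNightController(aisp_enabled=True)
legacy_modes = [legacy.update(lux) for lux in readings]
ai_modes = [ai_cam.update(lux) for lux in readings]
print(legacy_modes)
print(ai_modes)
```

The conventional controller spends most of the dusk sequence in black and white, while the AISP-style camera never leaves color mode.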

‘Straight to cloud’ architecture

For the cameras themselves, whether video goes to the cloud or to a video management system (VMS) might seem irrelevant, since it is all just streaming video. The reality is more complex, however, especially for cameras that stream directly to the cloud. These cameras usually combine local, on-camera storage with streaming to save on bandwidth and cloud storage costs.

To support this hybrid approach, the camera produces multiple encodings at different qualities and resolutions and sends them to different destinations at the same time. Meanwhile, on-camera AI algorithms continuously analyze the video feeds to orchestrate those streams and encode each one at the lowest possible bitrate. These are just some of the key components needed to accommodate this “straight to cloud” architecture.
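The hybrid setup can be pictured as a small set of stream profiles, each with its own resolution, destination, and bitrate range, plus a controller that lowers bitrates when the analytics report a quiet scene. The profile names, numbers, and the `activity` score (0 to 1, standing in for the output of on-camera AI) are all hypothetical, and the linear scaling is a deliberate simplification of real rate-control algorithms.

```python
from dataclasses import dataclass

@dataclass
class StreamProfile:
    name: str
    destination: str   # where this encoding is sent
    width: int
    height: int
    min_kbps: int
    max_kbps: int

# Hypothetical profiles for a camera that records locally and streams to the cloud.
PROFILES = [
    StreamProfile("archive", "on-camera storage", 3840, 2160, 2000, 12000),
    StreamProfile("cloud", "cloud service", 1920, 1080, 500, 4000),
    StreamProfile("preview", "mobile app", 640, 360, 100, 600),
]

def target_bitrates(activity: float, profiles=PROFILES):
    """Scale each stream's bitrate between its min and max by scene activity.

    activity: 0.0 (static scene) to 1.0 (busy scene), as scored by on-camera AI.
    """
    activity = min(max(activity, 0.0), 1.0)  # clamp out-of-range scores
    return {p.name: round(p.min_kbps + activity * (p.max_kbps - p.min_kbps))
            for p in profiles}

print(target_bitrates(0.1))  # quiet corridor at night
print(target_bitrates(0.9))  # crowd passing through
```

Because every profile is adjusted from the same activity score, a quiet scene saves bandwidth on the cloud stream and storage on the local archive at the same time.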

Keeping cybersecurity top-of-mind


Ambarella’s SoCs always implement the latest security mechanisms in both hardware and software. They combine well-known security features, such as Arm TrustZone and encryption algorithms, with an additional layer of proprietary mechanisms such as dynamic random access memory (DRAM) scrambling and key management policies.

“We take these measures because cybersecurity is of utmost importance when you design an SoC targeted to go into millions of security cameras across the globe,” says Gigot.

‘Eyes of the world’ – and more brains

Cameras are “the eyes of the world,” and visual sensors provide by far the largest share of the information gathered about the world, compared to other types of sensors. With AI, most security cameras now have a brain behind those eyes.

As a result, security cameras can evolve from purely reactive, security-focused devices into a global sensing infrastructure that can do everything from regulating office air conditioning based on occupancy, to detecting forest fires before anyone sees them, to following weather and world events. AI is the essential ingredient behind all these new applications, which will hopefully lead to a safer and better world.

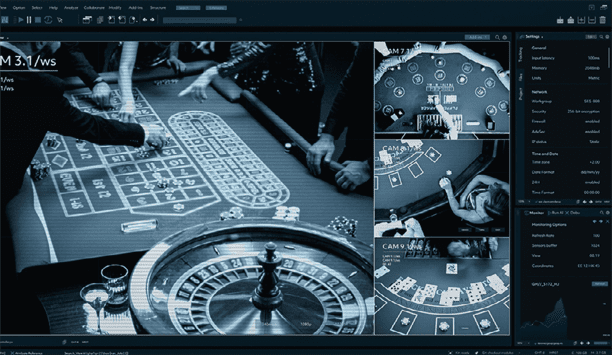

Learn why leading casinos are upgrading to smarter, faster, and more compliant systems