
Figure 5. Providing correct and useful results requires the intersection of user intentions, VCA interpretation, and the results provided by the VCA tool

The term Video Content Analysis (VCA) is often used to describe sophisticated video analytics technologies designed to assist analysts in classifying or detecting events of interest in videos. These events may include the appearance of a particular object, class of objects, or action. VCA technology employs a complex mix of algorithms, typically encompassing the fields of Computer Vision, Machine Learning, and Information Retrieval. This inherent complexity of VCA problems makes success difficult to demonstrate.

Over the past four years, System Planning Corporation, of Arlington, Virginia, has performed several technology surveys and evaluated nine VCA technologies for object classification and video search. Through discussions with potential users and detailed technical interchanges with developers, it has become clear that negative perceptions represent a significant obstacle to wider adoption of VCA technology.

Despite a number of challenges, we believe that VCA does have the potential to help solve real-world problems.

Challenge: Fiction vs. reality

The complexity, robustness, and maturity of VCA technology are advancing rapidly. This progress, coupled with fictional portrayals of VCA technology and the widespread use of narrow implementations, sometimes makes it difficult to know where reality ends and fantasy begins. What was impossible a few years ago is now commonplace.

Movies and TV shows such as the CSI and NCIS franchises blur the line between fact and fiction by portraying video search capabilities that do exist, but with considerably more speed, automation, accuracy, and robustness than is currently achievable. Meanwhile, Licence Plate Readers (LPR) are used routinely at toll booths and in parking garages, and facial recognition software can log you into your laptop or unlock your smartphone. Potential users therefore see that video and image analytics tools are ubiquitous, but don’t necessarily appreciate the operational constraints that make such systems work: an LPR camera views plates from a fixed position at a predictable angle, and a phone’s face unlock relies on a cooperative user at close range. Together, these factors can contribute to unrealistic expectations of state-of-the-art VCA technology.

Challenge: Agreeing on the question

Video search is inherently ambiguous because of the complexity and depth of information contained in an image. Each image chip in Figure 2 represents a potential match to the query image shown in Figure 1. Whether a chip represents a true positive (i.e., a right answer) depends on the mission at hand. Stated more technically, whether a potential match is correct depends on the level of fidelity (i.e., precision) required; a right answer for one user may be a wrong answer for another (Figure 3).

Figure 1. Example of a highly ambiguous query image, in which a single image can represent many concepts

Figure 2. Range of possible matches for the query image in Figure 1. What constitutes a right answer depends on the germane features in the query image

Figure 3. Required precision depends on the mission at hand

Imagine a law enforcement scenario involving an overnight crime in the vicinity of a low-quality CCTV security camera. Initially, investigators might use VCA to find vehicles passing through the camera’s field of view. At this stage, any detected vehicle constitutes a correct answer. After viewing all vehicles in the video and correlating them with other information, an investigator decides that the suspect vehicle is a silver sedan. A nearby, higher-quality video source is then queried for all silver sedans, so any detected silver sedan represents a true positive. Finally, after additional investigation, the suspect vehicle is identified. From then on, subsequent searches will accept only make/model matches as right answers.
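The effect of shifting mission requirements can be sketched in a few lines of Python. This is a minimal illustration under assumed conditions: the `Detection` fields, the attribute values, and the placeholder make/model labels are all hypothetical, and real VCA tools describe detections differently. The same four results are scored by precision (the fraction of returned results that count as true positives) under each of the three definitions of a right answer in the scenario.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Detection:
    # Hypothetical attributes a VCA tool might attach to a detected object.
    object_class: str   # e.g. "vehicle"
    description: str    # e.g. "silver sedan"
    make_model: str     # placeholder make/model label; "" if unknown

def is_vehicle(d: Detection) -> bool:
    return d.object_class == "vehicle"

def is_silver_sedan(d: Detection) -> bool:
    return is_vehicle(d) and d.description == "silver sedan"

def is_suspect_vehicle(d: Detection) -> bool:
    # "Make A Model 1" is a hypothetical stand-in for the identified suspect vehicle.
    return is_silver_sedan(d) and d.make_model == "Make A Model 1"

def precision(results: list[Detection], is_relevant) -> float:
    """Fraction of returned results that the current mission accepts."""
    if not results:
        return 0.0
    return sum(1 for d in results if is_relevant(d)) / len(results)

# One fixed set of VCA results.
results = [
    Detection("vehicle", "silver sedan", "Make A Model 1"),
    Detection("vehicle", "silver sedan", "Make B Model 2"),
    Detection("vehicle", "red pickup", "Make C Model 3"),
    Detection("vehicle", "white van", ""),
]

# The definition of a right answer tightens as the investigation progresses.
stages = {
    "any vehicle": is_vehicle,
    "silver sedan": is_silver_sedan,
    "make/model": is_suspect_vehicle,
}

for stage, rule in stages.items():
    print(f"{stage:>12}: precision = {precision(results, rule):.2f}")
```

The same result list scores 1.00, 0.50 and 0.25 as the definition of a right answer tightens, even though the tool's output has not changed.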

Challenge: The black box

The previous section presented examples in which search results are technically correct yet inconsistent with the user’s expectations and requirements. This challenge is inherent in VCA algorithms. Because computer vision algorithms represent objects using numerical models (descriptors), their interpretations of an image are not readily understood by human operators. As a result, the software cannot easily ask “Is this what you meant?” to clarify the features of interest. The cartoon in Figure 4 shows descriptors associated with the Histogram of Oriented Gradients (HOG) algorithm and possible resulting matches. In such a case, the only way to offer the user a choice is to present the answers on the right, 50% of which are likely incorrect.
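To illustrate why descriptors are hard to interpret, here is a deliberately simplified, pure-Python sketch of the idea behind HOG: a magnitude-weighted histogram of unsigned gradient orientations over a single cell. It omits much of the real algorithm (multiple cells, interpolated binning, block normalisation), and the synthetic edge patches are assumptions made for illustration. Because the orientations are unsigned, a dark-to-light edge and a light-to-dark edge, which a person would describe differently, yield identical descriptors.

```python
import math

def orientation_histogram(img, n_bins=9):
    """Single-cell, HOG-style descriptor: magnitude-weighted histogram of
    unsigned gradient orientations (0-180 degrees), L2-normalised."""
    h, w = len(img), len(img[0])
    hist = [0.0] * n_bins
    bin_width = 180.0 / n_bins
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Central-difference gradients, as in the usual HOG formulation.
            gx = img[y][x + 1] - img[y][x - 1]
            gy = img[y + 1][x] - img[y - 1][x]
            mag = math.hypot(gx, gy)
            if mag == 0:
                continue
            # Fold the gradient into the upper half-plane so that opposite
            # directions share one orientation (unsigned gradients).
            if gy < 0 or (gy == 0 and gx < 0):
                gx, gy = -gx, -gy
            angle = math.degrees(math.atan2(gy, gx))  # now in [0, 180]
            hist[min(int(angle // bin_width), n_bins - 1)] += mag
    # L2 normalisation makes the descriptor insensitive to overall contrast.
    norm = math.sqrt(sum(v * v for v in hist))
    return [v / norm for v in hist] if norm else hist

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def vertical_edge(left, right, size=6):
    """Synthetic grayscale patch with a vertical boundary between two intensities."""
    return [[left if x < size // 2 else right for x in range(size)] for _ in range(size)]

def horizontal_edge(top, bottom, size=6):
    """Synthetic grayscale patch with a horizontal boundary between two intensities."""
    return [[top if y < size // 2 else bottom for _ in range(size)] for y in range(size)]

dark_to_light = orientation_histogram(vertical_edge(0, 255))
light_to_dark = orientation_histogram(vertical_edge(255, 0))
horizontal = orientation_histogram(horizontal_edge(0, 255))

print(cosine_similarity(dark_to_light, light_to_dark))  # 1.0: the descriptor cannot tell them apart
print(cosine_similarity(dark_to_light, horizontal))     # 0.0: orthogonal orientations
```

Even this toy descriptor is a list of nine numbers with no obvious human meaning; a full HOG descriptor for the classic 64 × 128-pixel pedestrian window has 3,780 values, which is why the software cannot simply show a user what it is looking for.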

Figure 4. Numerical descriptors affect how an object is interpreted, yet are difficult to convey to a user

Closing the confidence gap

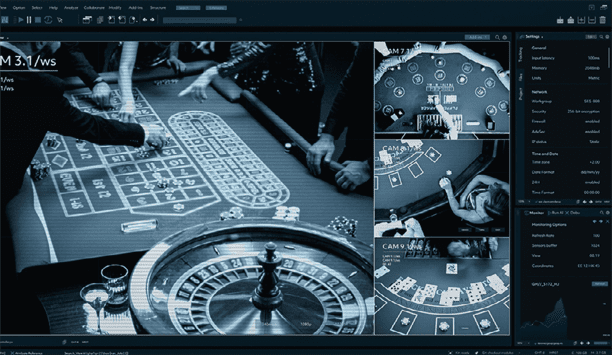

A significant challenge for VCA technology is providing not simply a correct result, but the desired result. As Figure 5 shows, providing desired results requires the convergence of three concepts: user intentions, VCA interpretation, and VCA results. A perfectly implemented algorithm would achieve complete overlap between interpretation and results, but this alone is not sufficient to satisfy user requirements. To convince potential users of the value of VCA tools, the overlap between user intentions and the software’s interpretation of those intentions must also be significant.
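The three regions in Figure 5 can be modelled as sets of candidate items, as in this minimal Python sketch. The item IDs are made up, and the Jaccard index is only one assumed way to quantify "overlap"; the article does not prescribe a metric. The sketch also shows why a perfect algorithm is not enough: results that match the tool's interpretation exactly still include items the user did not want and miss items the user did want.

```python
def jaccard(a: set, b: set) -> float:
    """Overlap of two sets: |A ∩ B| / |A ∪ B| (1.0 means identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

# Hypothetical object IDs in a video archive.
user_intention = {"obj1", "obj2", "obj3", "obj4"}              # what the analyst wants
vca_interpretation = {"obj2", "obj3", "obj4", "obj5", "obj6"}  # what the tool thinks was asked
vca_results = {"obj3", "obj4", "obj5", "obj7"}                 # what the tool returned

technically_correct = vca_interpretation & vca_results       # right by the tool's own reading
desired = user_intention & vca_interpretation & vca_results  # right by the user's reading

# A "perfect" algorithm returns exactly its own interpretation...
perfect_results = set(vca_interpretation)
unwanted = perfect_results - user_intention  # ...yet still returns unwanted items
missed = user_intention - perfect_results    # ...and misses wanted ones

print(sorted(technically_correct), sorted(desired))
print(jaccard(user_intention, vca_interpretation))
print(sorted(unwanted), sorted(missed))
```

Only raising the overlap between `user_intention` and `vca_interpretation` shrinks both `unwanted` and `missed`; improving the algorithm alone cannot.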

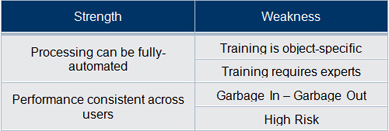

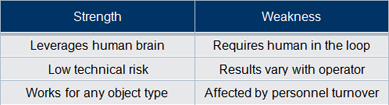

There are two ways to increase the overlap between user intentions and the VCA interpretation of those intentions:

1. Train the software

2. Train the user

We believe that the strengths of user training make this the preferred path toward improving confidence in VCA technology. This means that users must take the time to understand the subtleties and nuances of presented results and experiment with query images to learn how to limit undesirable answers.

Path forward

While we believe that user training is the best path toward improving acceptance, it is incumbent on VCA developers to provide users with useful tools and information. Without insight into what caused the VCA software to return a result, users cannot effectively modify their queries to improve performance. At a minimum, VCA tools should provide the following information:

- What was detected (bounding box)

- Why it was deemed a match (parametric scores)

Such information would provide insight into the VCA tool’s “thought process”, allowing the user to understand which image features are driving the results. Having given users the means to understand search results, the software should also let them modify those results by accentuating or de-emphasising certain image features.
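A minimal Python sketch of both ideas follows, assuming a tool that reports a bounding box and a per-feature similarity score in [0, 1] for each match. The class names, the feature names ("colour", "shape") and the weighted-mean scoring rule are hypothetical illustrations, not a description of any existing VCA product. Raising a feature's weight accentuates it; setting it to zero removes it from the ranking.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BoundingBox:
    x: int        # top-left corner, in pixels
    y: int
    width: int
    height: int

@dataclass
class MatchResult:
    clip_id: str
    bbox: BoundingBox                 # what was detected
    feature_scores: dict[str, float]  # why it matched: per-feature similarity in [0, 1]

    def explain(self, top_n: int = 3) -> list[tuple[str, float]]:
        """Features contributing most to the match, strongest first."""
        ranked = sorted(self.feature_scores.items(), key=lambda kv: kv[1], reverse=True)
        return ranked[:top_n]

    def score(self, weights: dict[str, float]) -> float:
        """Weighted mean of feature scores; unlisted features get weight 1.0."""
        total = sum(weights.get(f, 1.0) for f in self.feature_scores)
        if total == 0:
            return 0.0
        return sum(weights.get(f, 1.0) * s for f, s in self.feature_scores.items()) / total

def rerank(results: list[MatchResult], weights: dict[str, float]) -> list[MatchResult]:
    """Order results by user-weighted score, best first."""
    return sorted(results, key=lambda r: r.score(weights), reverse=True)

results = [
    MatchResult("clip_A", BoundingBox(120, 80, 64, 40), {"colour": 0.95, "shape": 0.40}),
    MatchResult("clip_B", BoundingBox(300, 150, 70, 45), {"colour": 0.60, "shape": 0.90}),
]

print([r.clip_id for r in rerank(results, {})])               # equal weights
print([r.clip_id for r in rerank(results, {"colour": 3.0})])  # accentuate colour
print(results[0].explain())
```

With equal weights clip_B ranks first; tripling the colour weight (or zeroing the shape weight) puts clip_A first, without rerunning detection. Keeping the per-feature scores alongside the bounding box is what makes this kind of user adjustment possible.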

VCA technology is not ready to autonomously provide perfect, error-free results, but with the right training and user experience, it is ready to significantly improve video analysts’ workflows.
